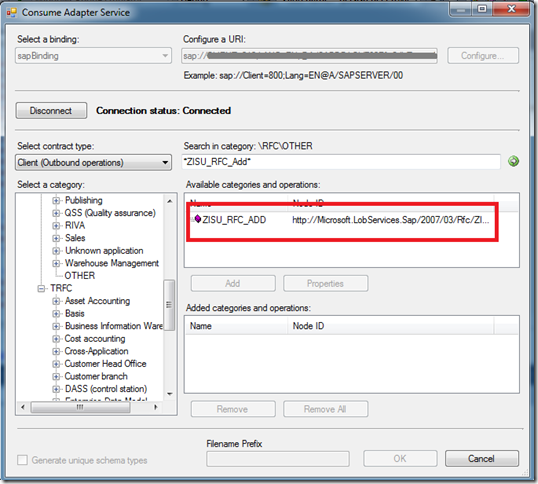

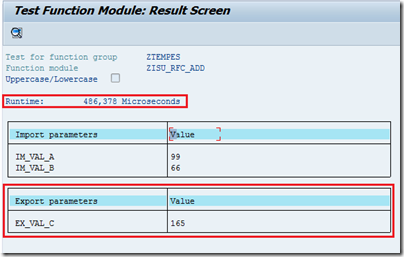

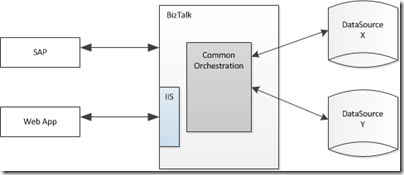

In my previous post, we discussed some of the reasons why BizTalk and SignalR may complement each other in some situations. I will now walk through the implementation of this OMS scenario.

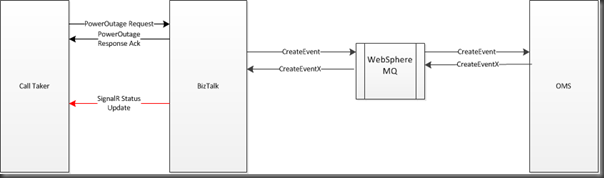

I am going to create a Call Taker Web application that will communicate with a WCF service exposed by BizTalk. Once BizTalk receives the request message, it will send an acknowledgement response back to the Call Taker Web application. BizTalk will then communicate with the OMS system. “In real life” this will involve WebSphere MQ, but for the purpose of this blog post I am simply going to use the FILE Adapter and a folder that will act as my queue. Once we have finished communicating with OMS, we want to send a status update message to the Call Taker application using SignalR. In this message we will include the Estimated Time of Restore (ETR) for the customer who has called in.

![image image]()

The Bits

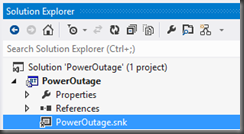

Other than a base BizTalk install, we are going to need the SignalR bits. As in most cases, NuGet is your friend. However, as you probably know, BizTalk requires any “helper” assemblies to be in the GAC, which means the SignalR.Client assembly has to be signed with a Strong Name key. To get around this I suggest you download the source from here, compile it and sign it yourself. You only need to do this for the SignalR.Client assembly.

The Solution

There are really 3 projects that make up this solution:

![image image]()

Let’s start with the BizTalk application since we are going to need to expose a WCF Service that the Web Application is going to consume.

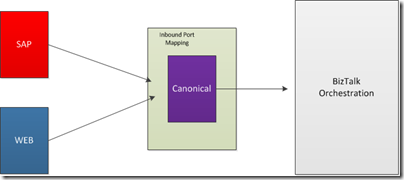

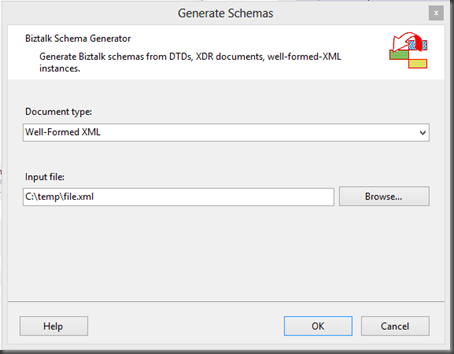

In total we are going to need 4 schemas:

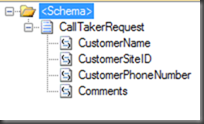

- CallTakerRequest – This schema will be exposed to our Web Application as a WCF Service. In this message we want to capture customer details.

![image image]()

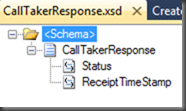

- CallTakerResponse – This will be our acknowledgement message that we will send back to the WCF client. The purpose is to provide the Web Application with assurance that we have received the request message successfully and that we “promise” to process it.

![image image]()

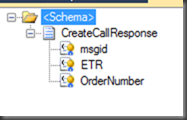

- CreateCallRequest – This message will be sent to our OMS system. Note that the msgid field is a promoted property. Since we are going to use correlation to tie the CreateCallRequest and CreateCallResponse messages together, we will use this field to bind the messages.

![image image]()

- CreateCallResponse – When our OMS system responds to BizTalk, it will include the same msgid that was sent in the request. This field will also be promoted. We mark the other two elements (ETR and OrderNumber) as distinguished fields so that we can easily pass them to the SignalR helper.

![image image]()

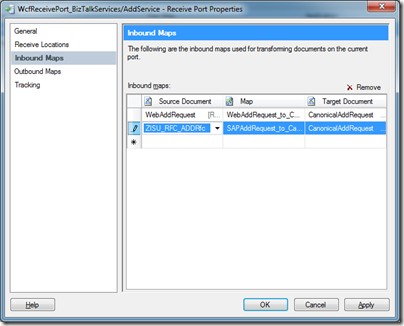

We will also need two maps:

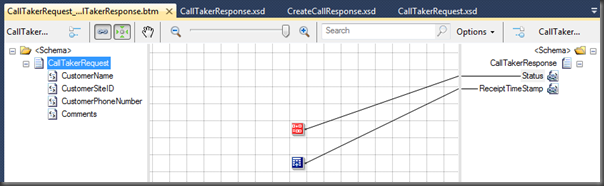

- CallTakerRequest_to_CallTakerResponse – The purpose of this map is to generate a response that we can send to the Web Client. We will simply use a couple of functoids to set a status of “True” and provide a timestamp.

![image image]()

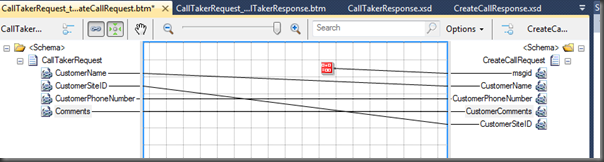

- CallTakerRequest_to_CreateCallRequest – This map will take our request message from our Web App and then transform it into an instance of our OMS Create Call message. For the msgid, I am simply hardcoding a value here to make my testing easier. In real life you need to ensure you have a unique value.

![image image]()

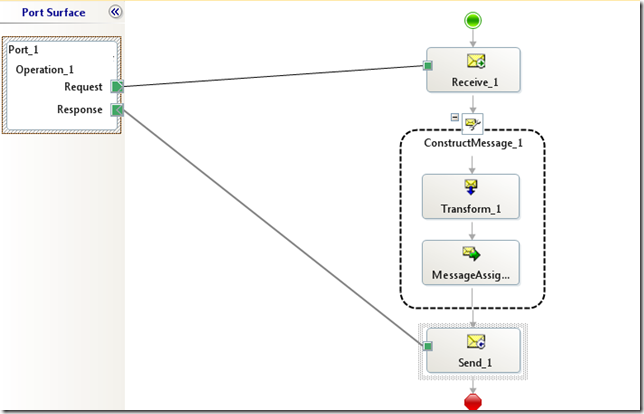

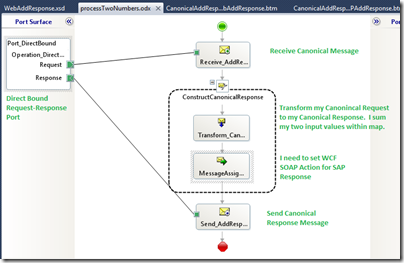

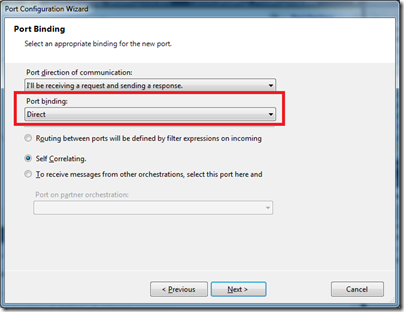

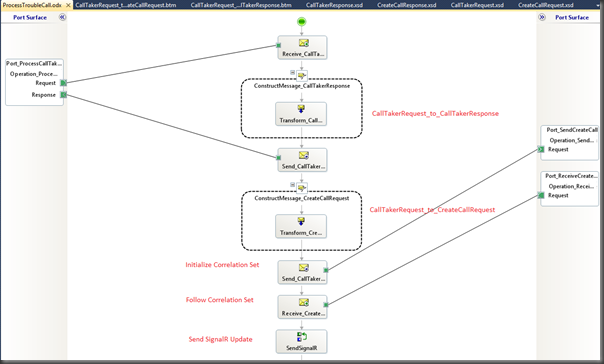

- We now need an Orchestration to tie all of these artifacts together. The Orchestration is pretty straightforward. However, as I mentioned when describing the CreateCall schemas, we have promoted the msgid element. The reason is that when we receive the response back from the OMS system, we want it to be matched to the same request instance that was sent to OMS. To support this we need to create a CorrelationType and a CorrelationSet.
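To make the matching rule concrete, here is a minimal JavaScript sketch of what correlating on msgid means. It is an illustration only, not BizTalk code: BizTalk does this matching for you once the correlation set is configured. The names `waitingInstances`, `sendCreateCallRequest`, `receiveCreateCallResponse` and the sample values are all hypothetical.

```javascript
// Illustration only: in BizTalk the correlation set does this matching for you.
// All function and variable names are hypothetical.
const waitingInstances = new Map(); // msgid -> request still waiting for OMS

function sendCreateCallRequest(request) {
  // Remember the waiting request under its msgid when it is sent.
  waitingInstances.set(request.msgid, request);
}

function receiveCreateCallResponse(response) {
  // Find the request that was sent with the same msgid.
  const request = waitingInstances.get(response.msgid);
  if (request === undefined) {
    return null; // no waiting request has this msgid
  }
  waitingInstances.delete(response.msgid);
  return {
    customerName: request.customerName,
    etr: response.ETR,
    orderNumber: response.OrderNumber
  };
}

// Usage with made-up values
sendCreateCallRequest({ msgid: "demo-1", customerName: "Jane Doe" });
const matched = receiveCreateCallResponse({ msgid: "demo-1", ETR: "18:30", OrderNumber: "1001" });
console.log(matched.customerName, matched.etr, matched.orderNumber);
```

A response whose msgid does not match any waiting request finds nothing to resume, which is why a hardcoded msgid is only acceptable for testing.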

![image image]()

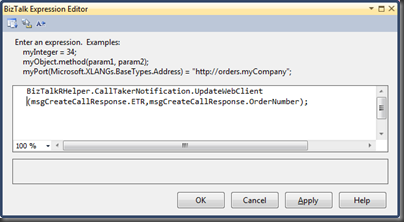

The final Expression shape, labelled ‘Send SignalR Update’, is of particular interest to us since it calls a helper method that sends our update to the Web Application via the SignalR API.

![image image]()

This is a good segue into the C# Class Library project called BizTalkRHelper.

BizTalkRHelper Project

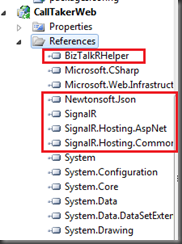

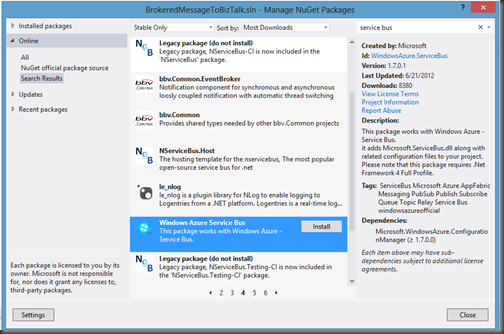

Since we are going to start interfacing with SignalR within this project, we are going to need a few project references, which we can get from NuGet. However, please recall that we need a signed SignalR.Client assembly, so we will need to compile its source code and sign it with a Strong Name key. This can be the same key as the one that was used in the BizTalk project. As I mentioned before, we need to GAC this assembly, which is why the Strong Name key is required. We will also need to GAC the Newtonsoft.Json assembly, but this does not require any additional signing on our part.

Otherwise we can use the assemblies that are provided as part of the NuGet packages.

![image image]()

This project includes two classes:

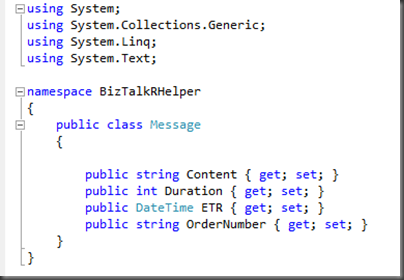

- Message – This class is used as our strongly typed message that we will send to our web app.

![image image]()

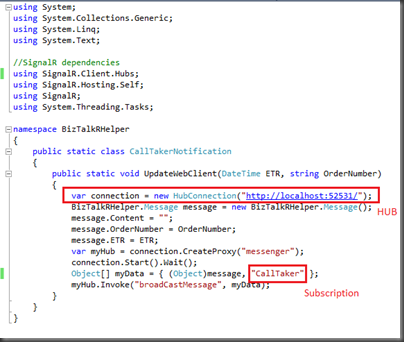

- CallTakerNotification – Within this class we will establish a connection to our hub, construct an instance of the message that we want to send to our client, provide the name of what you can think of as a subscription, and then send the message. Obviously, in a real-world scenario hardcoding this URI is not a good idea. You may also recognize that this is the method that we are going to call from BizTalk, as we are passing in the Estimated Time of Restore (ETR) and the OrderNumber that we received from our OMS system. This is why we marked these elements in the CreateCallResponse message as distinguished. This also means that our BizTalk project will require a reference to this BizTalkRHelper project so that we can call this assembly from our Orchestration.

![image image]()
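As a rough picture of what reaches the browser, the sketch below assumes the Message object arrives as JSON with the property names ETR and OrderNumber, which are the names the client script in this post reads. The sample values and the `isOrderUpdate` guard are hypothetical and only there to show that the property names are case-sensitive.

```javascript
// Sample payload with made-up values; only the property names ETR and OrderNumber
// come from the client script in this post.
const samplePayload = '{"ETR":"18:30","OrderNumber":"1001"}';

// Hypothetical guard: returns true only when both expected properties are present.
function isOrderUpdate(message) {
  return message !== null && typeof message === "object" &&
    "ETR" in message && "OrderNumber" in message;
}

const message = JSON.parse(samplePayload);
console.log(isOrderUpdate(message));          // true
console.log(isOrderUpdate({ etr: "18:30" })); // false: property names are case-sensitive
```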

CallTakerWeb Project

This project will be used to store our Web Application artifacts. Once again, with this project we need to get the SignalR dependencies. I suggest using NuGet and searching for SignalR.

![image image]()

Next, we need to add a couple of classes to our project. These classes are really where the “heavy lifting” is performed. I use the term “heavy” lightly considering how few lines of code we are actually writing versus the functionality that is being provided. Note: I can’t take credit for these two classes as I have leveraged the following post: http://65.39.148.52/Articles/404662/SignalR-Group-Notifications.

- Messenger – Provides helper methods that will allow us to:

  - Get All Messages

  - Broadcast a message

  - Get Clients

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Web;
using System.Collections.Concurrent;
using SignalR;

namespace CallTakerWeb
{
    public class Messenger
    {
        // Lazily created singleton instance.
        private readonly static Lazy<Messenger> _instance = new Lazy<Messenger>(() => new Messenger());

        private readonly ConcurrentDictionary<string, BizTalkRHelper.Message> _messages =
            new ConcurrentDictionary<string, BizTalkRHelper.Message>();

        private Messenger()
        {
        }

        /// <summary>
        /// Gets the instance.
        /// </summary>
        public static Messenger Instance
        {
            get
            {
                return _instance.Value;
            }
        }

        /// <summary>
        /// Gets all messages.
        /// </summary>
        /// <returns></returns>
        public IEnumerable<BizTalkRHelper.Message> GetAllMessages()
        {
            return _messages.Values;
        }

        /// <summary>
        /// Broadcasts the message to a group.
        /// </summary>
        /// <param name="message">The message.</param>
        /// <param name="group">The group to send the message to.</param>
        public void BroadCastMessage(Object message, string group)
        {
            // Invokes the client-side "add" function on every client in the group.
            GetClients(group).add(message);
        }

        /// <summary>
        /// Gets the clients.
        /// </summary>
        /// <returns></returns>
        private static dynamic GetClients(string group)
        {
            var context = GlobalHost.ConnectionManager.GetHubContext<MessengerHub>();
            return context.Clients[group];
        }
    }
}
```

- MessengerHub – Is used to:

  - Initialize an instance of our Hub

  - Add to a new group

  - Get All Messages

  - Broadcast a message to a group

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Web;
using SignalR.Hubs;
using BizTalkRHelper;

namespace CallTakerWeb
{
    [HubName("messenger")]
    public class MessengerHub : Hub
    {
        private readonly Messenger _messenger;

        public MessengerHub() : this(Messenger.Instance) { }

        /// <summary>
        /// Initializes a new instance of the <see cref="MessengerHub"/> class.
        /// </summary>
        /// <param name="messenger">The messenger.</param>
        public MessengerHub(Messenger messenger)
        {
            _messenger = messenger;
        }

        // Adds the calling connection to the named group.
        public void AddToGroup(string group)
        {
            this.Groups.Add(Context.ConnectionId, group);
        }

        /// <summary>
        /// Gets all messages.
        /// </summary>
        /// <returns></returns>
        public IEnumerable<BizTalkRHelper.Message> GetAllMessages()
        {
            return _messenger.GetAllMessages();
        }

        /// <summary>
        /// Broadcasts the message to a group.
        /// </summary>
        /// <param name="message">The message.</param>
        /// <param name="group">The group to send the message to.</param>
        public void BroadCastMessage(Object message, string group)
        {
            _messenger.BroadCastMessage(message, group);
        }
    }
}
```
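To show how AddToGroup and BroadCastMessage work together, here is a toy in-memory model of groups written in JavaScript. It is not SignalR code and says nothing about how SignalR implements groups internally. The names `groups`, `addToGroup`, `recipientsOf` and the connection ids are hypothetical.

```javascript
// Toy in-memory model of hub groups; this is not SignalR code and all names are hypothetical.
const groups = new Map(); // group name -> Set of connection ids

function addToGroup(connectionId, group) {
  if (!groups.has(group)) {
    groups.set(group, new Set());
  }
  groups.get(group).add(connectionId);
}

// Returns the connection ids that would receive a message sent to the group.
function recipientsOf(group) {
  return Array.from(groups.get(group) || []);
}

addToGroup("browser-1", "CallTaker");
addToGroup("browser-2", "CallTaker");
addToGroup("browser-3", "Dispatch");
console.log(recipientsOf("CallTaker")); // browser-1 and browser-2 only
```

The point is that a message broadcast to the "CallTaker" group only reaches connections that joined that group, so other pages connected to the same hub are not disturbed.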

With our SignalR plumbing out of the way, we need to make some changes to our Site.Master page. Since I am using the default Web Application project, it uses a Site.Master template. We need to include some script references to some libraries. By placing them here we only need to include them once and can use them on any other page that utilizes the Site.Master template.

```html
<script src="Scripts/jquery-1.6.4.min.js" type="text/javascript"></script>
<script src="Scripts/BizTalkRMessengerHub.js" type="text/javascript"></script>
<script src="Scripts/jquery.signalR-0.5.2.js" type="text/javascript"></script>
<script src="../signalr/hubs"></script>
```

You may not recognize the second reference (BizTalkRMessengerHub.js), nor should you, since it is custom. I will explore this file further in a bit.

Next we want to modify the Default.aspx page. We want to include some <div> tags so that we have placeholders for content that we will update via jQuery when we receive the message from BizTalk.

We also want to include a label called lblResults. We will update this label based upon the acknowledgement that we receive back from BizTalk.

```html
<div class="callTakerDefault" id="callTaker" ></div>
<asp:Label ID="lblResults" runat="server" Text=""></asp:Label>
<div id="orderUpdate"> </div>
<div id="etr"> </div>
<div id="orderNumber"></div>
<br />
<h2>Please provide Customer details</h2>
<table>
    <tr>
        <td>Customer Name: <asp:TextBox ID="txtCustomer" runat="server"></asp:TextBox></td>
    </tr>
    <tr>
        <td>Phone Number: <asp:TextBox ID="txtPhoneNumber" runat="server"></asp:TextBox> </td>
    </tr>
    <tr>
        <td>Customer Site ID: <asp:TextBox ID="txtCustomerSiteID" runat="server"></asp:TextBox></td>
    </tr>
    <tr>
        <td>Comments: <asp:TextBox ID="txtComments" runat="server"></asp:TextBox></td>
    </tr>
</table>
<asp:Button ID="Button1" runat="server" Text="Submit" onclick="Button1_Click" /><br />
```

The last piece of the puzzle is the BizTalkRMessengerHub.js file that I briefly mentioned. Within this file we will establish a connection to our hub, add ourselves to the CallTaker subscription and then get all related messages.

When we receive a message, we will use jQuery to update the div tags that we have embedded within our Default.aspx page. We want to provide information like the Estimated Time of Restore and the Order Number that the OMS system provided.

```javascript
$(function () {
    // Generate the client-side hub proxy (initialized to the exposed hub)
    var messenger = $.connection.messenger;

    function init() {
        // Join the CallTaker group, then ask the hub for all messages
        messenger.addToGroup("CallTaker");
        return messenger.getAllMessages().done(function (message) {
        });
    }

    messenger.begin = function () {
        $("#callTaker").html('Call Taker Notification System is ready');
    };

    // Called by the server when a message is broadcast to our group
    messenger.add = function (message) {
        // Update divs
        $("#orderUpdate").html('Order has been updated');
        $("#etr").html('Estimated Time of restore is: ' + message.ETR);
        $("#orderNumber").html('Order Number: ' + message.OrderNumber);

        // Set custom backgrounds
        $("#orderUpdate").toggleClass("callTakerGreen");
        $("#etr").toggleClass("callTakerGreen");
        $("#orderNumber").toggleClass("callTakerGreen");
    };

    // Start the connection
    $.connection.hub.start(function () {
        init().done(function () {
            messenger.begin();
        });
    });
});
```
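The handler above builds its text inline while updating the page. If you want to check that text without a browser, one option is to pull the formatting into a small function, as in this minimal sketch. The function name `formatOrderUpdate` and the sample values are hypothetical; the strings are the ones used in the script above.

```javascript
// Hypothetical helper: builds the text for the three divs from a notification message.
function formatOrderUpdate(message) {
  return {
    orderUpdate: "Order has been updated",
    etr: "Estimated Time of restore is: " + message.ETR,
    orderNumber: "Order Number: " + message.OrderNumber
  };
}

// Inside messenger.add the result could be applied like this (needs jQuery and the page):
//   var text = formatOrderUpdate(message);
//   $("#orderUpdate").html(text.orderUpdate);
//   $("#etr").html(text.etr);
//   $("#orderNumber").html(text.orderNumber);

const text = formatOrderUpdate({ ETR: "18:30", OrderNumber: "1001" });
console.log(text.etr);         // Estimated Time of restore is: 18:30
console.log(text.orderNumber); // Order Number: 1001
```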

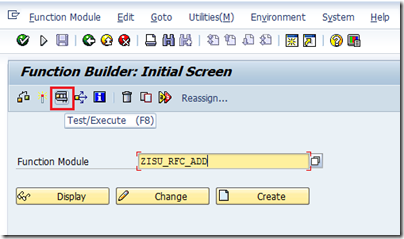

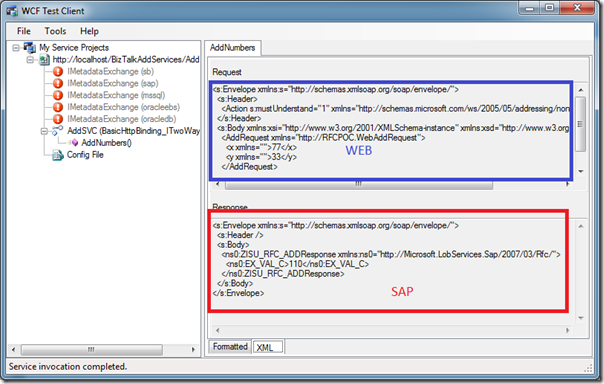

Testing the Application

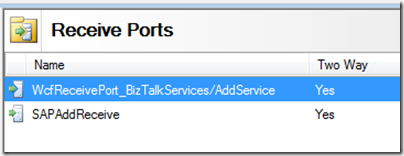

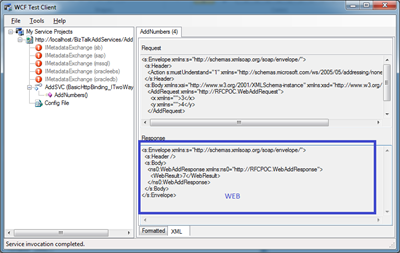

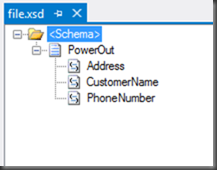

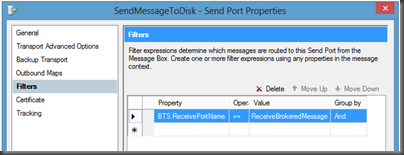

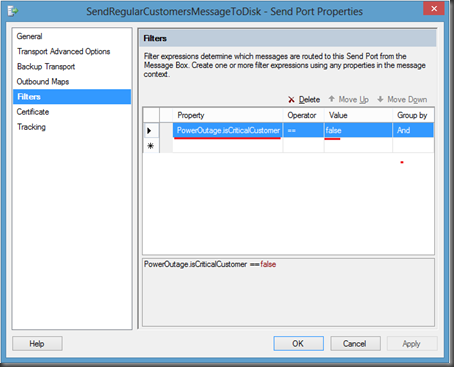

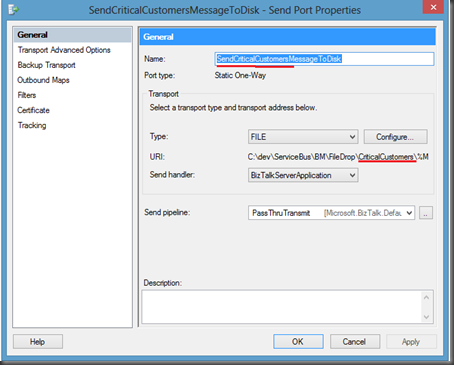

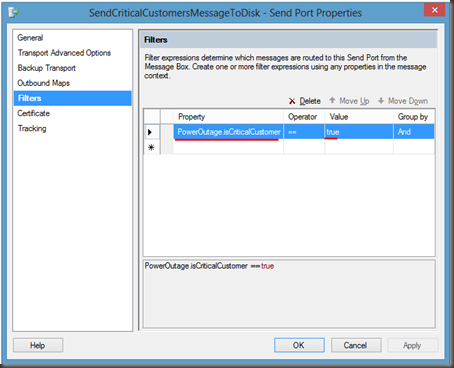

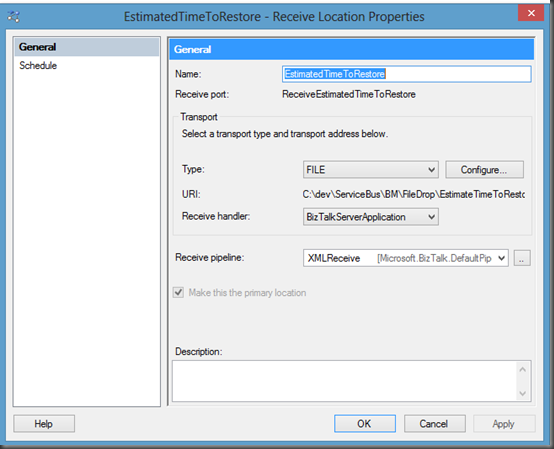

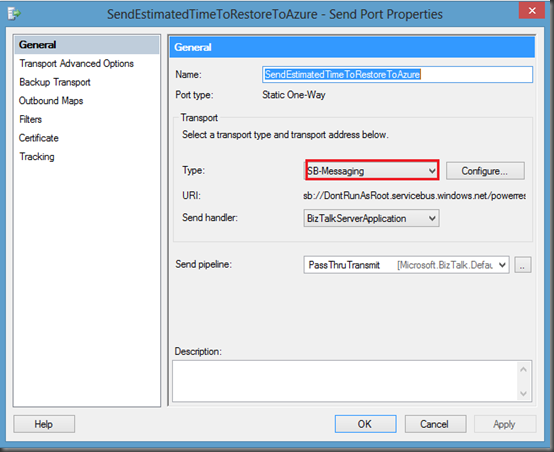

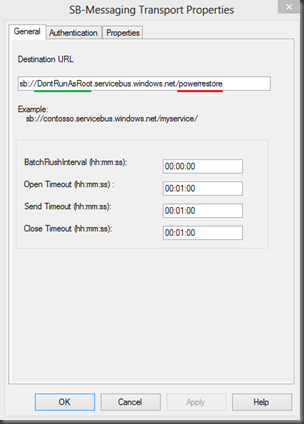

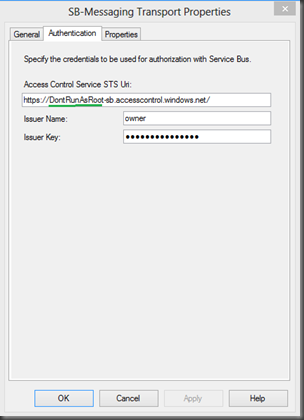

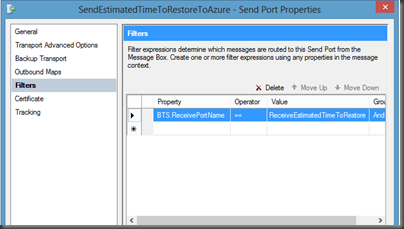

So once we have deployed our BizTalk application and configured our Send and Receive Ports we are ready to start testing. To do so we will:

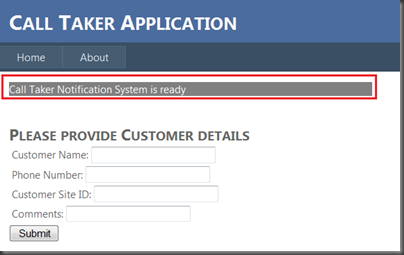

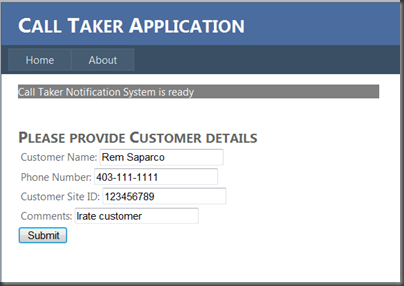

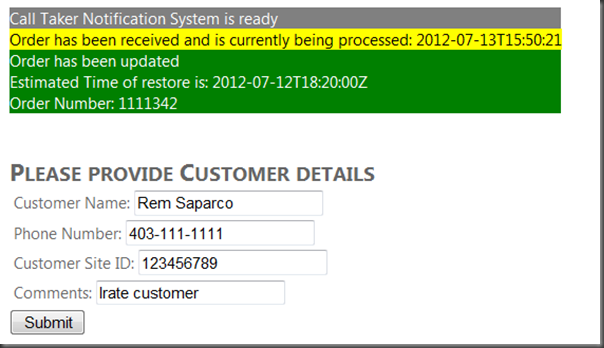

- Launch our Web Application. The first thing that you may notice is that we have a <div> update indicating that our Notification System is ready. What this means is that our browser has created a connection to our Hub and is now listening for messages. This functionality was included in the JavaScript file that we just discussed.

![image image]()

- Next we will populate the Customer form with the customer's details and then click the Submit button.

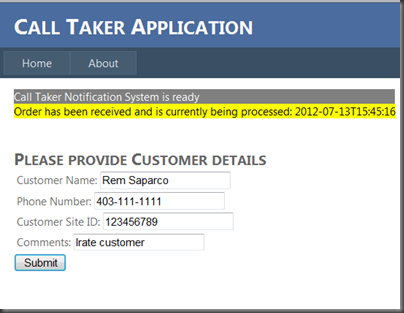

![image image]()

- Once the button has been pressed we should receive an acknowledgement back from BizTalk and we will update the results label indicating that the Order has been received and that it is currently being processed.

![image image]()

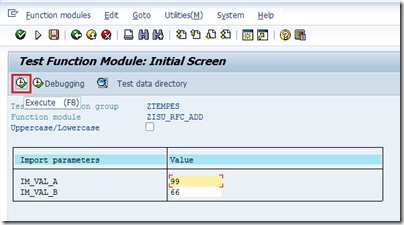

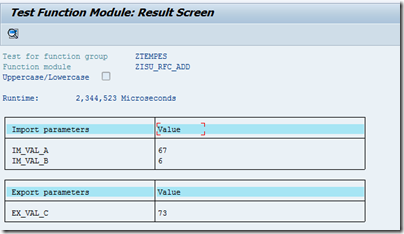

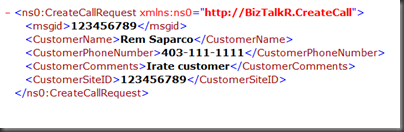

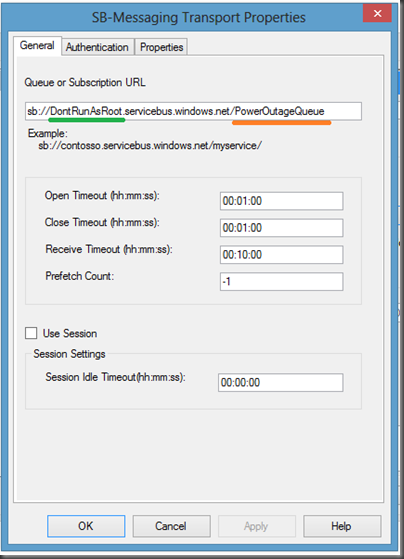

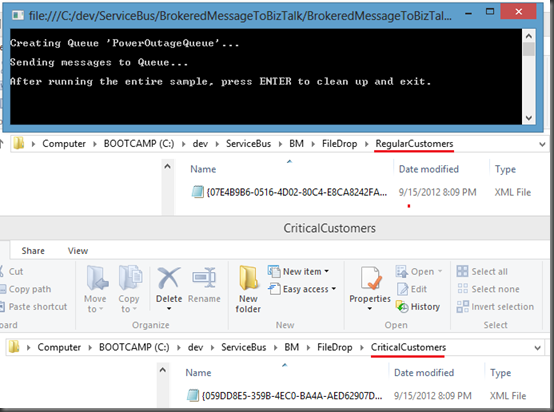

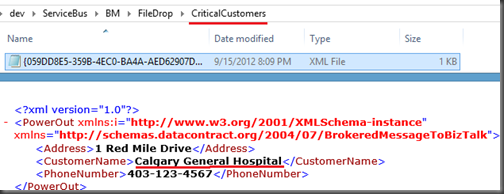

- You may recall that at this point we will start exchanging messages asynchronously with the OMS system. For the purpose of this blog post I am just using the FILE Adapter to communicate with the file system. When I navigate to the folder that is specified in my Send Port, I see a newly created file with the following contents:

![image image]()

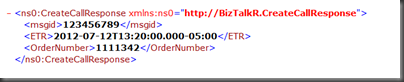

- Ordinarily, the OMS system would send back an Acknowledgement message automatically but for this post, I am just going to mock one up and place it in the folder that my Receive Location is expecting. You will notice that I am also using the same msgid to satisfy my Correlation Set criteria.

![image image]()

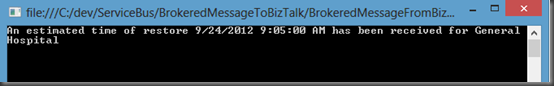

- When BizTalk processes the CreateCallResponse, it will invoke our SignalR helper and a message will be sent to our Web Browser, which will be updated without any postbacks or browser refreshes. Below you will see the three div tags being updated with the information that was passed from BizTalk.

![image image]()

Conclusion

At this point I hope that you are impressed with SignalR. I find it pretty amazing that we have other systems like BizTalk sending messages to our Web Application asynchronously without the browser having to be posted back or refreshed. I also think that this technology is a great way to bridge different synchronous/asynchronous messaging patterns.

I hope that I have provided a practical scenario that demonstrates how these two technologies can complement each other to provide a great user experience to end users. We are seriously considering using this type of pattern in an upcoming project. This was really my introduction to the technology and I do have some exploring to do, but so far I am very happy with the results.