I recently made it home from a great week at Microsoft’s Redmond campus, where I attended the Integrate 2014 event. I want to take this opportunity to thank both Microsoft and BizTalk360 for being the lead sponsors and organizers of the event.

![image image]()

![image image]()

I also want to call out the other sponsors, as these events typically do not take place without this type of support. I think it is also a testament to just how deep Microsoft’s partner ecosystem really is, and it was a pleasure to interact with you over the course of the week.

Speaking at the event

I want to thank Microsoft and BizTalk360 for inviting me to speak at this event. This was the first time that I have had the chance to present at Microsoft’s campus, and it was an experience I don’t think I will ever forget. I have been to Microsoft’s campus around 20 times for various events but had never had the opportunity to present, so accepting the invitation was a pretty easy decision.

One of the best parts of being involved in the Microsoft MVP program is the international network that you develop. Many of us have been in the program for several years and really value each other’s experience and expertise. Whenever we get together, we often compare notes and talk about the industry. We had a great conversation about the competitive landscape. We also discussed the way that products are being sold with a lot of buzzwords and marketecture. People were starting to get caught up in this instead of focusing on some of the fundamental requirements. Just as any project should be based upon a formal, methodical, requirements-driven approach, so should buying an integration platform.

These conversations introduced the idea of developing a whitepaper where we would identify requirements “if I was buying” an integration platform. Joining me on this journey were Michael Stephenson and Steef-Jan Wiggers. We focused on both functional and nonfunctional requirements. We also took this opportunity to rank the Microsoft platform, which includes BizTalk Server, BizTalk Services, Azure Service Bus and Azure API Management. Our ranking was based upon our experiences with these tools and how well our generic integration requirements could be met by the Microsoft stack. This whitepaper is available on the BizTalk360 site for free. Whether you are a partner, system integrator, integration consultant or customer, you are welcome to use and alter it as you see fit. If you feel we have missed some requirements, you are encouraged to reach out to us. We are already planning a 1.1 version of this document to address some of the recent announcements from the Integrate event.

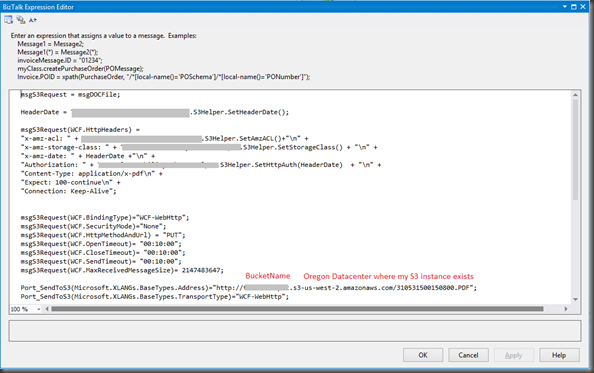

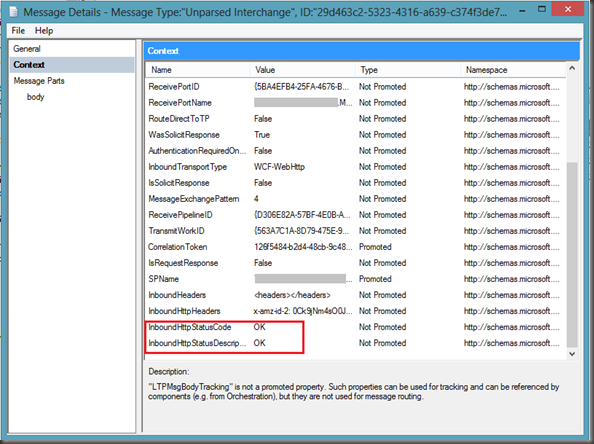

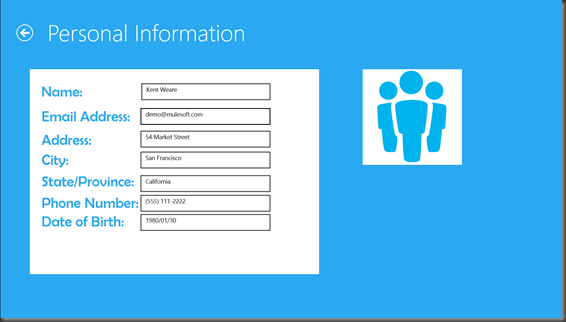

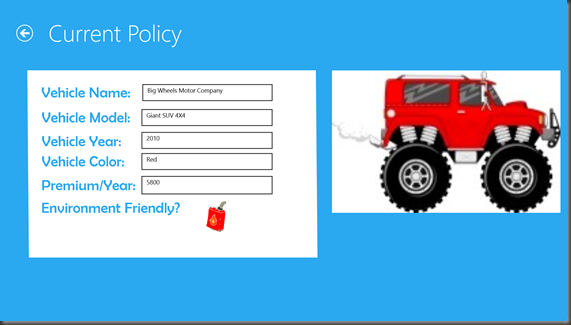

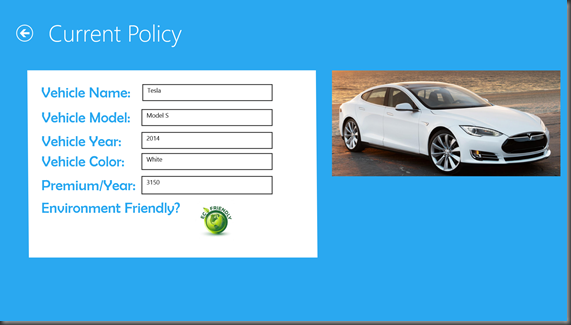

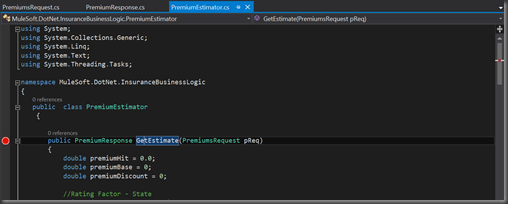

My presentation focused on 10 of the different requirements that were introduced in the paper. I also included a ‘Legacy Modernization’ demo that highlights Microsoft’s ability to deliver on some of the requirements that were discussed in the whitepaper. This session was recorded and will be published on the BizTalk360 site in the near future.


Recap

Disclaimer: What I am about to discuss is all based upon public knowledge that was communicated during the event. I have been careful to ensure that what is described is accurate to the best of my knowledge. It was a fast and furious three days with information moving at warp speed. I have also included some of my own opinions, which may or may not be in line with Microsoft’s way of thinking. For some additional perspectives, I encourage you to check out the following blog posts from the past week:

Event Buildup

There was a lot of build-up to this event; with Integration MVPs seeing some early demos, there was cause for a lot of excitement. This spilled over to Twitter, where @CrazyBizTalk posted this prior to the event kicking off. The poster (I know who you are!) was correct: there has never been so much activity on Twitter related to Microsoft Integration. Feel free to check out the timeline for yourself here.

![Embedded image permalink]()

Picture Source @CrazyBizTalk

Keynote

The ever-popular Scott Guthrie, otherwise known as “Scott Gu”, kicked off the Integrate 2014 event. Scott is the EVP of Microsoft’s Cloud and Enterprise group. He provided a broad update on the Azure platform, describing all of the recent investments that have been rolled out.

![Embedded image permalink]()

Picture Source @SamVanhoutte

Some of the more impressive points that Scott made about Azure include:

- Azure Active Directory supports identity federation with 2,342 SaaS platforms

- Microsoft Azure is the only cloud provider in all four Gartner Magic Quadrants

- Microsoft Azure provides the largest VMs in the cloud, known as ‘G’ machines (for Godzilla). These VMs support 32 cores, 448 GB of RAM and 6,500 GB of SSD storage

- Microsoft is adding 10,000+ customers per week to Microsoft Azure

Among some attendees, I sensed some confusion about why there was so much emphasis on Microsoft Azure. In hindsight, it makes a lot of sense. Scott was really setting the stage for what would become a conference focused on a cohesive Azure platform where BizTalk becomes one of the centerpieces.

![Embedded image permalink]()

Picture Source @gintveld

A Microservices platform is born

Next up was Bill Staples. Bill is the General Manager for the Azure Application Platform, also known as “Azure App Platform”. Azure App Platform is the foundational ‘fabric’ that currently enables a lot of Azure innovation and will fuel the next-generation integration tools for Microsoft.

A foundational component of Azure App Platform is App Containers. These containers support many underlying Azure technologies that enable:

- 400k+ Apps Hosted

- 300k Unique Customers

- 120% Yearly Subscription Growth

- 2 Billion Transactions daily

Going forward, we can expect BizTalk ‘capabilities’ to run inside these containers. Given these numbers, I don’t think performance will be a constraint.

![Embedded image permalink]()

Picture Source @tomcanter

Later in the session, it was disclosed that Azure App Platform will enable new BizTalk capabilities that will be available in the form of Microservices. Microservices will make it possible to compose services in a very granular way. We will have the ability to ‘chain’ these Microservices together inside a browser (at design time), while enjoying the benefits of deploying to an enterprise platform that will provide message durability, tracking, management and analytics.
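To make the idea of ‘chaining’ a little more concrete, here is a minimal sketch in plain Python of what composing small services into a pipeline with per-step tracking looks like conceptually. Nothing here comes from Microsoft: the step functions (`validate`, `apply_rules`, `transform`), the `Message` type and the `run_chain` helper are all hypothetical, and the in-memory tracking list merely stands in for the durability and tracking the platform is expected to provide.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

# Hypothetical message: a payload plus a log of the steps it has passed through.
@dataclass
class Message:
    payload: Dict[str, Any]
    tracking: List[str] = field(default_factory=list)

# A hypothetical "microservice" is modelled as a function from payload to payload.
Step = Callable[[Dict[str, Any]], Dict[str, Any]]

def validate(payload: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical validation step: reject messages without an employee id.
    if "employee_id" not in payload:
        raise ValueError("missing employee_id")
    return payload

def apply_rules(payload: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical rules step: flag amounts over 1000 for approval.
    return {**payload, "needs_approval": payload.get("amount", 0) > 1000}

def transform(payload: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical mapping step: rename fields for a target system.
    return {
        "EmpId": payload["employee_id"],
        "Amount": payload.get("amount", 0),
        "Approval": payload["needs_approval"],
    }

def run_chain(steps: List[Step], message: Message) -> Message:
    """Run each step in order, recording its name so the flow can be tracked end to end."""
    for step in steps:
        message.payload = step(message.payload)
        message.tracking.append(step.__name__)
    return message

result = run_chain([validate, apply_rules, transform],
                   Message({"employee_id": 42, "amount": 1500}))
print(result.payload)   # {'EmpId': 42, 'Amount': 1500, 'Approval': True}
print(result.tracking)  # ['validate', 'apply_rules', 'transform']
```

The point of the sketch is only the shape of the idea: each step is small and independently replaceable, and the chain, rather than any individual step, is responsible for tracking. In the platform that was described, that orchestration and persistence would come from the underlying Azure platform rather than from your own code.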

I welcome this change. The existing BizTalk platform is very reliable, robust, well understood and well supported. The challenge is that the BizTalk core, or engine, is over 10 years old, and the integration landscape has evolved while BizTalk has struggled to keep pace.

Exposing BizTalk capabilities as Microservices puts Microsoft at the forefront of integration platforms, leapfrogging many innovative competitors. It allows Microsoft’s customers to enable transformational scenarios for their business. Some of the Microservices that we can expect to be part of the platform include:

- Workflow (BPM)

- SaaS Connectivity

- Rules (Engine)

- Analytics

- Mapping (Transforms)

- Marketplace

- API Management

![Embedded image permalink]()

Picture Source @jeanpaulsmit

We can also see where Microsoft is positioning BizTalk Microservices within this broader platform:

![Embedded image permalink]()

Picture Source @wearsy

What is exciting about this new platform is the role that BizTalk now plays in it. For a while now, people have felt that BizTalk is the system that sits in the corner that nobody likes to talk about. Now, BizTalk is a key component within the App Platform that will enable many integration scenarios, including new lightweight scenarios that have been challenging for BizTalk Server to support in the past.

Whenever a new platform like this is introduced, there is always the tendency to chase ‘shiny objects’ while ignoring the traditional capabilities that earned the existing platform its market share. Microsoft seems to have a good handle on this and has outlined the fundamentals that they are using to build this new platform. This was very encouraging to see.

![Embedded image permalink]()

Picture Source @wearsy

At this point the room was buzzing. Some people were nodding their heads with delight (including myself), others were struggling with the term Microservice, and others were concerned about how their existing requirements fit into the new world. I will now break down the types of Microservices that we can expect to see in this new platform.

Workflow Microservice

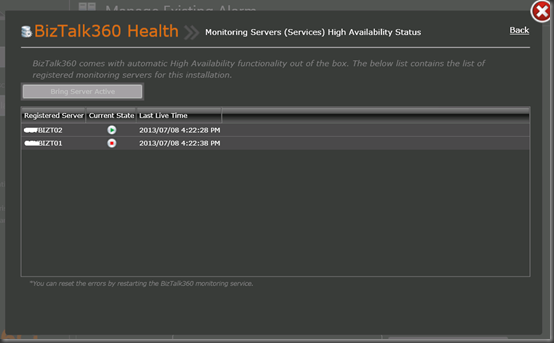

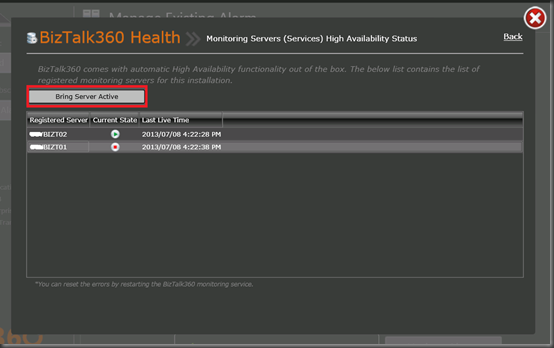

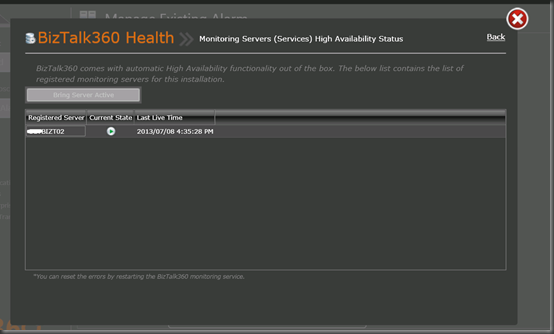

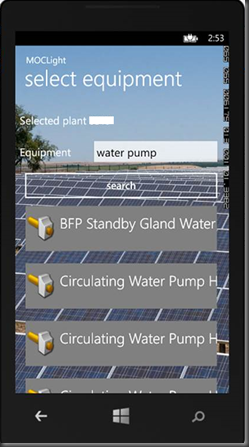

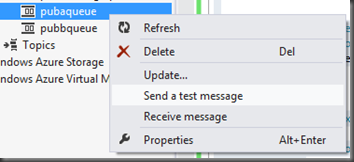

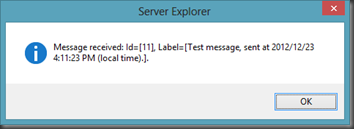

One of the current gaps in Microsoft Azure BizTalk Services (MABS) is workflow. In the following image we can see the workflow composer, which is hosted inside a web browser. We can expose a workflow as a Microservice, but we can also pull other Microservices, such as a SaaS connector or a Rules Service, into the workflow.

![Embedded image permalink]()

Picture Source @saravanamv

In the right-hand corner of this screen we can see some of the Microservices that we can pull in. The picture is a little “grainy”, but some of the items include:

- Validation

- Retrieve Employee Details (custom Microservice I suppose)

- Rules

- Custom Filter

- Acme (custom Microservice I suppose)

- Survey Monkey (SaaS Connector)

- Email (SaaS Connector)

![Embedded image permalink]()

Picture Source @mikaelsand

In the demo we were able to see a Workflow being triggered, with the tracking information made available in real time. There is also the ability to schedule a workflow, run it manually or trigger it from another process.

Early in the BizTalk days there was an attempt to involve Business Analysts in the development of Workflows (aka Orchestrations). This model never really worked well, as Visual Studio was just too developer-focused, and Orchestration Designer for Business Analysts (ODBA) just didn’t have the functionality required to be a really good tool. Microsoft is once again attempting to bring the Business Analyst into the solution by providing a simple-to-use tool hosted in a web browser. I am always a bit skeptical when companies try to enable these types of BA scenarios, but I think past failures were primarily driven by workflows being defined in an IDE instead of a web browser.

![Embedded image permalink]()

Picture Source @wearsy

Once again, it is nice to see Microsoft focusing on key tenets that will drive their investment. I am also glad to see some of the traditional integration requirements being addressed, including:

- Persist State

- Message Assurance

- End to end tracking

- Extensibility

All too often some of these ‘new age’ platforms provide lightweight capabilities but neglect the features that integration developers need to support their business requirements. I don’t think this is the case with BizTalk going forward.

![Embedded image permalink]()

Picture Source @wearsy

SaaS Connectivity

A gap that has existed in the BizTalk Server platform is SaaS connectivity. While BizTalk does provide a WebHttp Adapter that can both expose and consume RESTful services, I don’t think it is enough (as I discussed in my talk). I feel that providing great SaaS connectors is mandatory, as they make developers more productive and reduce the time it takes to deliver projects. Delivering value quicker is one of the reasons why people buy Integration Platforms, so having a library of full-featured, stable connectors for SaaS platforms is becoming increasingly important. I relate the concept of BizTalk SaaS connectors to Azure Active Directory federation. That platform boasts more than 2,000 ‘identity adapters’. Why should it be any different for integration?

The following image is a bit busy, but some of the Connector Microservices we can expect include:

- Traditional Enterprise LOBs

- Dynamics CRM Online

- SAP SuccessFactors

- Workday

- SalesForce

- HDInsight

- Quickbooks

- Yammer

- Dynamics AX

- Azure Mobile Services

- Office 365

- Coupa

- OneDrive

- SugarCRM

- Informix

- MongoDB

- SQL Azure

- BOX

- Azure Blobs and Table

- ….

This list is just the beginning. Check out the Marketplace section in this post for more announcements.

![Embedded image permalink]()

Picture Source @wearsy

Rules Microservice

Rules (Engines) are a component that shouldn’t be overlooked when evaluating Integration Platforms. I have been at many organizations where the mantra is ‘the middleware should not contain any business rules’. In principle, I agree with this approach; however, it is not always that easy. What do you do when you are integrating COTS products that don’t allow you to customize them? Or there may be situations where you can customize, but do not want to, as you may lose your customizations in a future upgrade. Enter a Rules platform.

The BizTalk Server Rules Engine is a stable and good Rules Engine. It does have some extensibility and can be called from outside BizTalk using .NET. At times it has been criticized as being a bit heavy and difficult to maintain. I really like where Microsoft is heading with its Microservice implementation that will expose “Rules as a Service” (RaaS? - ok I will stop with that). This allows integration interfaces to leverage this Microservice, but it also allows other applications, such as web or mobile applications, to use it. I think there will be endless opportunities for the broader Azure ecosystem to leverage this capability without introducing a lot of infrastructure.

![Embedded image permalink]()

Picture Source @wearsy

Once again, Microsoft is enabling non-developers to participate in this platform. I think a Rules engine is a place where Business Analysts should participate. I have seen this work on a recent project with Data Quality Services (DQS) and don’t see why this can’t transfer to the Rules Microservice.

![Embedded image permalink]()

Picture Source @wearsy

Data Transformation

Another capability that will be exposed as a Microservice is Data Transformation (or mapping). This capability will also live in a web browser. If you look closely at the following image, you will discover that we will continue to have what appears to be a functoid (or equivalent).

Only time will tell if a Web Browser will provide the power to build complex Maps. One thing that BizTalk Server is good at is dealing with large and complex maps. The BizTalk mapping tools also provide a lot of extensibility through managed code and XSLT. We will have to keep an eye on this as it further develops.

![image image]()

Analytics

Within BizTalk Server we have Business Activity Monitoring (BAM). It is a very powerful tool but has been accused of being too heavy at times. One of the benefits of leveraging the power of Azure is that we will be able to plug into all of those other investments being made in this area.

While there were not a lot of specifics related to Analytics, I think it is a pretty safe bet that Microsoft will be able to leverage their Power BI suite, which is making giant waves in the industry.

One interesting demo they did show us used Azure to consume SalesForce data and display it in familiar Microsoft BI tools.

I see a convergence between cloud-based integration, Internet of Things (IoT), Big Data and predictive analytics. Microsoft has some tremendous opportunities in this space, as they have very competent offerings in each of these areas. If Microsoft can find a way to ‘stitch’ them all together, there will be some amazing solutions developed.

![]()

Picture Source @wearsy

Below is a Power BI screen that displays SalesForce Opportunities by Lead Source.

![]()

Picture Source @wearsy

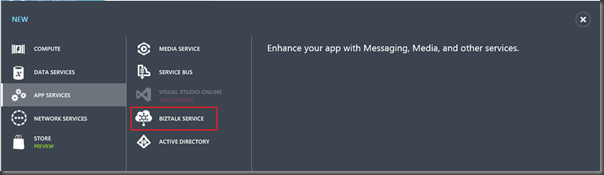

Marketplace - Microservice Gallery

Buckle your seatbelts for this one!

Azure already has a marketplace, appropriately called Azure Marketplace. In this Marketplace you can leverage 3rd party offerings including:

- Data services

- Machine Learning

- Virtual Machines

- Web applications

- Azure Active Directory applications

- Application services

You can also expect a Microservice Gallery to be added to this list. This will allow 3rd parties to develop Microservices and add them to the Marketplace. These Microservices can be monetized in order to develop a healthy ecosystem. At the beginning of this blog post you saw a list of Microsoft partners who are active in the existing Integration ecosystem. Going forward, you can expect to see these partners, along with other Azure partners and independent developers, building Microservices and publishing them to this Marketplace.

In the past there has been some criticism about BizTalk being too .NET-specific and not supporting other languages. Well, guess what? Microservices can be built using other languages that are already supported in Azure, including:

- Java

- Node.js

- PHP

- Python

- Ruby

This means that if you wanted to build a Microservice that talks to SaaS application ‘XYZ’, you could build it in one of these languages and then publish it to the Azure Marketplace. This is groundbreaking.

The image below describes how a developer would publish their Microservice to the gallery through a wizard-based experience.

![Embedded image permalink]()

Picture Source @wearsy

Another aspect of the gallery is the introduction of templates. Templates are another artifact that 3rd parties can publish and contribute. Given the very large Microsoft ISV community and its deep domain expertise, this has the potential to be very big.

Some of the examples that were discussed include:

- Dropbox – Office365

- SurveyMonkey – SalesForce

- Twitter – SalesForce

With a vast number of Connector Microservices, the opportunities are endless. I know a lot of the ISVs in the audience were very excited to hear this news and were discussing which templates they are going to build first.

![Embedded image permalink]()

Picture Source @nickhauenstein

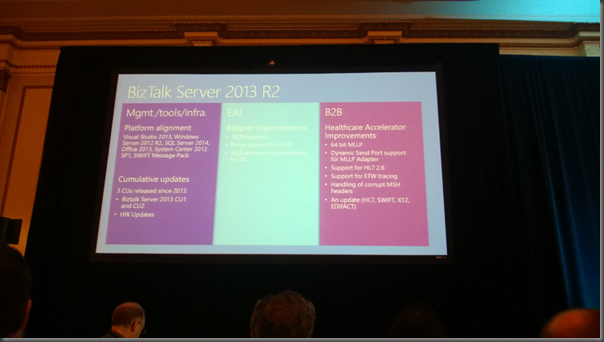

What about BizTalk Server?

Without question, a lot of attendees are still focused on On-Premises integration. This is in part due to some of the conservative domains that these people support. Some people were concerned about their existing investments in BizTalk Server. Microsoft confirmed (again) their commitment to these customers: you will not be left behind! On the flipside, I don’t think you can expect a lot of innovation in the traditional On-Premises product, but you will be supported and new versions will be released, including BizTalk Server 2015.

You can also expect every BizTalk Server capability to be made available as a Microservice in Azure. Microsoft has also committed to providing a great artifact migration experience that allows customers to transition into this new style of architecture.

![Embedded image permalink]()

Picture Source @wearsy

Conclusion

If there is one thing that I would like you to take away from this post, it is the “power of the Azure platform”. This is not the BizTalk team working in isolation to develop the next-generation platform. This is the BizTalk team working in concert with the larger Azure App Platform team. And it isn’t only the BizTalk team participating, but also other teams such as the API Management team, the Mobile Services team, the Data team and many more, I am sure.

In my opinion, the BizTalk team being part of this broader team, working side by side with them and reporting up the same organizational chart, is what will make this possible and wildly successful.

Another encouraging theme that I witnessed was the need for a lighter-weight platform without compromising Enterprise requirements. When you look at some of the other platforms that allow you to build interfaces in a web browser, falling short on those Enterprise requirements is what they are often criticized for. With Microsoft having such a rich history in Integration, they understand these use cases as well as anyone in the industry.

Overall, I am extremely encouraged with what I saw. I love the vision and the strategy. Execution will become the next big challenge. Since there is a very large Azure App Platform team providing a lot of the foundational platform, I do think the BizTalk team has the bandwidth, talent and vision to bring the Integration specific Microservices to this amazing Azure Platform.

In terms of next steps, we can expect a public preview of Microservices (including BizTalk) in Q1 of 2015. Notice how I didn’t say a BizTalk Microservices public preview? This is not just about BizTalk; this is about a new Microservice platform that includes BizTalk. As soon as more information is publicly available, you can expect to see updates on this blog.